The site is no longer accessible.

'''
https://ganlinmu.live/index.php/vod/type/id/1/page/86.html
'''

import hashlib
import lxml
import requests
import base64
import random
import json
import time
import os
from bs4 import BeautifulSoup as bs
from lxml import etree
import re

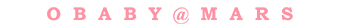

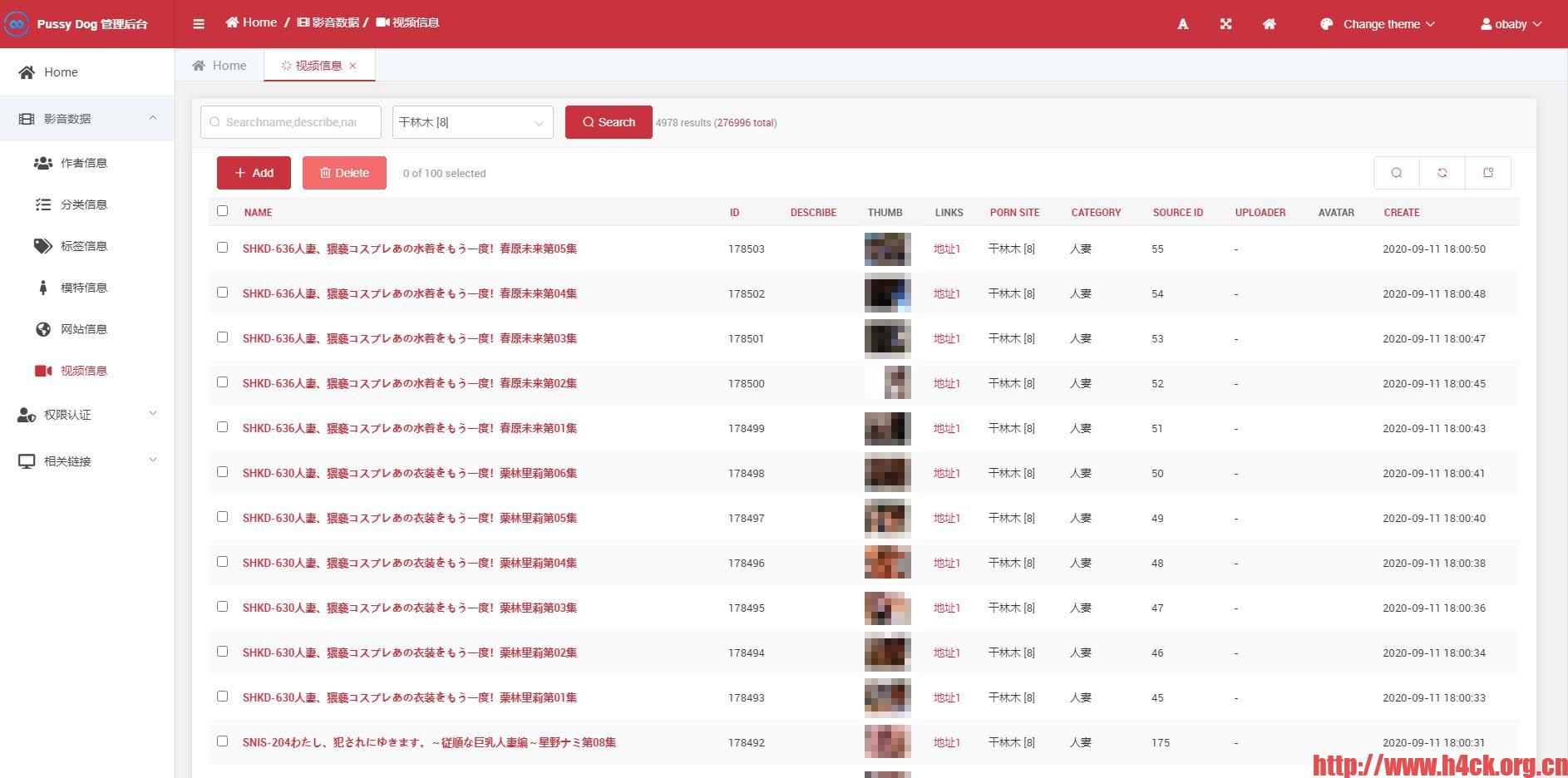

pussy_dog_host = 'http://192.168.1.2:8009'

cookie = '__cfduid=db71863e7b71a23a629f3d36449081bf21599788426; PHPSESSID=cl04b4419gl452irrh17tik4ij; kt_tcookie=1; kt_is_visited=1; HstCfa4385406=1599788433962; HstCmu4385406=1599788433962; kt_ips=2408%3A8215%3Ae18%3A5330%3A%3A19%2C112.225.215.52; HstCnv4385406=2; HstCns4385406=3; kt_qparams=category%3Dry; HstCla4385406=1599801632513; HstPn4385406=12; HstPt4385406=19'

site_id = 8

class NoPorn(object):
    failed_count: int

    def __init__(self):
        self.header = {
            'Host': 'ganlinmu.live',
            'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.102 Safari/537.36',
            'Connection': 'Keep-Alive',
            'Accept-Encoding': 'gzip',
        }
        self.host = 'https://ganlinmu.live/'
        # Counts already-stored videos seen while crawling the current category
        self.failed_count = 0
        # Categories to crawl; 'key' and 'name' are sent to the backend unchanged
        cat_list = [
            {'url': self.host + 'index.php/vod/type/id/1/', 'key': 'gan1', 'name': '日韩'},
            {'url': self.host + 'index.php/vod/type/id/2/', 'key': 'gan2', 'name': '乱伦'},
            {'url': self.host + 'index.php/vod/type/id/3/', 'key': 'gan3', 'name': '欧美'},
            {'url': self.host + 'index.php/vod/type/id/4/', 'key': 'gan4', 'name': '国产'},
            {'url': self.host + 'index.php/vod/type/id/5/', 'key': 'gan5', 'name': '人妻'},
        ]
        self.cat_list = cat_list

    def send_data(self, post_data):
        # if self.failed_count > 5:
        #     print('[E] Pussy dog server connection failed repeatedly, exiting')
        #     import os
        #     os._exit(0)
        url = pussy_dog_host + '/add-movie/'
        try:
            response = requests.post(url, json=post_data, timeout=10)
            print('[R] ', response.text)
        except Exception as e:
            # Best effort: report the failure and keep crawling
            print('[F] Failed to add data:', e)
        print('*' * 100)

    def check_exists(self, pk):
        try:
            url = pussy_dog_host + '/check-movie-exists/'
            response = requests.post(url, json={'id': pk, 'site_id': site_id},
                                     timeout=10).json()
            print('[C] ', response)
            # The backend answers with status == 1 when the video is already stored
            return response['status'] == 1
        except Exception:
            # Network or parsing errors are treated as "not found"
            return False

    def update_girl_avatar(self, chinese_name, pk, avatar):
        try:
            url = pussy_dog_host + '/update-model-avatar/'
            response = requests.post(url, json={'id': pk, 'avatar': avatar, 'chinese_name': chinese_name},
                                     timeout=10).json()
            print('[C] ', response)
            return response['status'] == 1
        except Exception:
            # Network or parsing errors are reported as a failed update
            return False

    def http_get(self, url):
        try:
            response = requests.get(url, headers=self.header, timeout=10).text
        except Exception as e:
            print(e)
            # Wait 10 seconds, then retry once before giving up
            time.sleep(10)
            try:
                response = requests.get(url, headers=self.header, timeout=10).text
            except Exception:
                return None
        return response

    def get_m3u8_link(self, url):
        print('_' * 70)
        print('[A] Resolving playback URL......')
        html_doc = self.http_get(url)
        soup = bs(html_doc, "html.parser")
        # The player config is assumed to be embedded as `var player_data={...}`
        pattern = re.compile(r"var player_data=(\{.*\})", re.DOTALL)
        player = soup.find('div', class_='myplayer')
        surls = player.find('script')
        # print(surls)
        # Keep only the JSON object; the tag and the JS assignment are not valid JSON
        js_string = pattern.search(str(surls)).group(1)
        print(js_string)
        json_data = json.loads(js_string)
        m3u8_link = json_data['url']
        title = soup.title.string
        print('[A] Title: ' + title)
        print('[A] Playback URL: ' + m3u8_link)
        print('_' * 70)
        return m3u8_link, title

    def get_item_detail(self, i, cat):
        print('-' * 150)
        print('Parsing video info')
        title = i.find('h4').get_text()
        title = str(title).replace(',', ' ').replace('\r', '').replace('\n', '').replace('\t', '')
        print('Title:', title)
        video_url = i.find('a', class_='uzimg')['href']
        # The sixth path segment of the link is the video id
        video_id = str(video_url).split('/')[5]
        print('Video id: ', video_id)
        img_url = i.find('img')['data-original']
        cover_image_url = i.find('img')['src']
        print('IMG1: ', img_url)
        print('IMG2: ', cover_image_url)
        play_url = self.host + video_url
        m3u8_url, t = self.get_m3u8_link(play_url)
        print('Playback URL:', m3u8_url)
        if self.check_exists(video_id):
            print('Video already exists, skipping')
            # Counted so the main loop can stop once a category is mostly duplicates
            self.failed_count += 1
            return
        msg = {
            'id': video_id,
            'title': title,
            'thumb': img_url,
            'thumb_raw': img_url,
            'preview': img_url,
            'site_id': site_id,
            'video_link1': m3u8_url,
        }
        if cat is not None:
            msg['key'] = cat['key']
            msg['category'] = cat['name']
        self.send_data(msg)
        print('_' * 150)

    def get_cat_page_detail(self, page_url):
        html_content = self.http_get(page_url)
        # print(html_content)
        soup = bs(html_content, 'html.parser')
        ct = soup.find('div', class_='myvod')
        items = ct.find_all('li')
        # The category depends only on the page URL, so look it up once
        cat = self.get_url_cat_info(page_url, self.cat_list)
        for i in items:
            self.get_item_detail(i, cat)

    def get_cat_all_page_count(self, html_content):
        # print(html_content)
        soup = bs(html_content, 'html.parser')
        pager = soup.find('div', class_='mypage')
        # The last pager link points at the final page, e.g. .../page/86.html
        last_page_url = pager.find_all('a')[-1]['href']
        page_count = str(last_page_url).split('/')[-1].split('.')[0]
        print('Pages in this category:', page_count)
        return int(page_count)

    def get_all_cat_list(self, html_content):
        soup = bs(html_content, 'html.parser')
        cat_menu = soup.find('ul', class_='list')
        cat_urls = cat_menu.find_all('a')
        cl = []
        for c in cat_urls:
            # The link text holds the name followed by a rank; keep only the name
            full_name_with_rank = c.get_text('|')
            # print(full_name_with_rank)
            name = str(full_name_with_rank).split('|')[0]
            url = c['href']
            # print(url)
            # cat_name = c.stripped_strings[0]
            # print(cat_name)
            key = str(url).split('/')[-2]
            # print(key)
            cd = {
                'url': url,
                'key': key,
                'name': name,
            }
            cl.append(cd)
        print('All categories:', cl)
        return cl

    # Look up the category entry whose base URL is contained in the given URL
    def get_url_cat_info(self, url, ul):
        for u in ul:
            if u['url'] in url:
                return u
        return None

if __name__ == '__main__':
    # print('main')
    nop = NoPorn()
    # html_content =nop.http_get('https://ganlinmu.live/index.php/vod/type/id/1/')
    # page_count = nop.get_cat_all_page_count(html_content)
    #
    # nop.get_cat_page_detail('https://ganlinmu.live/index.php/vod/type/id/1/')
    # print(nop.cat_list)
    # exit()
    for c in nop.cat_list:
        nop.failed_count = 0
        print('*' * 200)
        print('Processing category:', c['name'])
        html_content = nop.http_get(c['url'])
        page_count = nop.get_cat_all_page_count(html_content)
        # An optional 'start' key in a category entry resumes from that page
        start_page = 1
        if 'start' in c:
            start_page = c['start']
        for i in range(start_page, page_count + 1):
            print('~' * 160)
            print('Page:', i)
            print('Category:', c['name'])
            if nop.failed_count > 15:
                print('Too many duplicate videos detected, moving on to the next category')
                break
            page_url = c['url'] + 'page/' + str(i) + '.html'
            nop.get_cat_page_detail(page_url)
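
The script's regex shows that the player page carries its configuration as a `var player_data={...}` assignment inside a script tag. Below is a minimal sketch of one way to pull that object out without a network call. The helper name `extract_player_data` and the sample HTML are made up for illustration, and the sample only assumes the page layout. It swaps the string replacement for `json.JSONDecoder.raw_decode`, which stops at the end of the first JSON value and ignores whatever follows it.

```python
import json
import re

# Hypothetical helper, not part of the original script.
# Matches the JS assignment and leaves the cursor at the start of the object.
ASSIGNMENT_RE = re.compile(r"var\s+player_data\s*=\s*")


def extract_player_data(html):
    """Return the embedded player_data object as a dict, or None if missing or invalid."""
    match = ASSIGNMENT_RE.search(html)
    if match is None:
        return None
    try:
        # raw_decode parses one JSON value and tolerates trailing text such as </script>
        data, _end = json.JSONDecoder().raw_decode(html[match.end():])
    except json.JSONDecodeError:
        return None
    return data


# Made-up sample page; the real markup may differ.
sample = (
    '<div class="myplayer"><script type="text/javascript">'
    'var player_data={"url":"https://example.com/video/index.m3u8","from":"demo"}'
    '</script></div>'
)

data = extract_player_data(sample)
print(data["url"])
```

Returning `None` instead of raising lets a caller skip a single broken page and carry on with the rest of the category.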